What is TensorFlow?

TensorFlow is a powerful, open-source software library designed for machine learning and artificial intelligence. It was originally developed by the Google Brain team. TensorFlow was developed using a combination of programming languages:

- C++ provides the speed and flexibility needed for numerical computation and optimized tensor operations.

- Python provides the main user-facing API and acts as a bridge to the underlying C++ code.

- CUDA (for GPU acceleration) executes operations on NVIDIA GPUs. It is a software layer that gives direct access to the GPU’s virtual instruction set and parallel computational elements for the execution of compute kernels.

- JavaScript (for TensorFlow.js) brings machine learning to the web. TensorFlow.js is designed to run machine learning models directly in a web browser or Node.js environment.

- Swift (experimental). Swift for TensorFlow was an experimental project aimed at bringing TensorFlow’s capabilities to Swift; the project has since been archived.

For my project, I used ml5.js, a library built on top of TensorFlow.js.

What is a Tensor?

Tensors are multi-dimensional arrays with a consistent data type (called a dtype, such as float64, int16, or bool). Tensors are immutable: their contents cannot be modified after creation, so you need to create a new tensor to hold different data.
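To make these two properties a bit more concrete, here is a small sketch in plain JavaScript with no TensorFlow.js calls. It uses a typed array as a stand-in for "one dtype for every element", and a hypothetical helper, `withValue`, that returns a new array instead of changing the original. Real tensors work differently under the hood, so treat this as an analogy only.

```javascript
// Analogy only: plain JavaScript, not the TensorFlow.js API.

// A typed array stores every element as the same numeric type,
// much like a tensor has a single dtype.
const readings = Int16Array.from([21.7, 19.2, 23.9]);
console.log(Array.from(readings)); // fractional parts are dropped: [21, 19, 23]

// Hypothetical helper: "changing" a value produces a new array,
// leaving the original untouched (an immutable-style update).
function withValue(data, index, value) {
  const copy = data.slice(); // copy first, then change only the copy
  copy[index] = value;
  return copy;
}

const updated = withValue(readings, 0, 25);
console.log(Array.from(readings)); // original is unchanged
console.log(Array.from(updated));  // new array holds the new value
```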

Tensor Basics

- Scalar (rank-0 tensor)

A scalar tensor contains a single value and has no dimensions or axes. For example, a temperature reading is a scalar value. - Vector (rank-1 tensor)

A vector is a one-dimensional array of numbers. Each value can be located using its position (index) in the array. For instance, a vector could represent the ages of your students. - Matrix (rank-2 tensor)

A matrix is a two-dimensional array where elements are identified by two indices. Matrices are widely used in applications like image processing. - Higher-rank Tensors

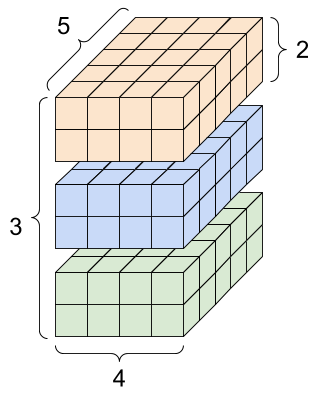

Tensors with more than two dimensions can be thought of as multi-dimensional grids. For example, a rank-4 tensor with the shape[3, 2, 4, 5]represents a grid with 4 axes. This visualization of a rank-4 tensor is from TensorFlow’s documentation.
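The ranks described above can be sketched with plain nested JavaScript arrays. This is not TensorFlow.js code: `rankOf` is a hypothetical helper that counts how deeply the arrays are nested, and it assumes the nesting is regular (every row has the same length).

```javascript
// Plain JavaScript sketch: nested arrays standing in for tensors.

// Hypothetical helper: count how deeply the arrays are nested.
function rankOf(value) {
  let rank = 0;
  while (Array.isArray(value)) {
    rank += 1;
    value = value[0]; // step into the first element along the next axis
  }
  return rank;
}

const temperature = 21.5;            // scalar, rank 0
const ages = [18, 19, 21, 20];       // vector, rank 1
const image = [[0, 255], [255, 0]];  // matrix, rank 2
const clip = [image, image, image];  // rank 3: a stack of matrices

console.log(rankOf(temperature)); // 0
console.log(rankOf(ages));        // 1
console.log(rankOf(image));       // 2
console.log(rankOf(clip));        // 3
```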

Tensor Shapes

A tensor’s shape is defined by the number of elements (or length) along each of its axes. For instance, a tensor with shape [3, 2, 4, 5] has:

- 3 elements along the first axis,

- 2 elements along the second,

- 4 elements along the third,

- 5 elements along the fourth.
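Here is a minimal sketch of the same idea in plain JavaScript, again without TensorFlow.js. Both helpers, `shapeOf` and `zeros`, are hypothetical names made up for this example. Multiplying the lengths along all axes gives the total number of elements.

```javascript
// Hypothetical helper: read the length along each axis of a regular nested array.
function shapeOf(value) {
  const shape = [];
  while (Array.isArray(value)) {
    shape.push(value.length);
    value = value[0];
  }
  return shape;
}

// Hypothetical helper: build a zero-filled nested array with the given shape.
function zeros(shape) {
  if (shape.length === 0) return 0;
  const [length, ...rest] = shape;
  return Array.from({ length }, () => zeros(rest));
}

const grid = zeros([3, 2, 4, 5]);
const shape = shapeOf(grid);
const elementCount = shape.reduce((total, n) => total * n, 1);

console.log(shape);        // [3, 2, 4, 5]
console.log(elementCount); // 120 (3 * 2 * 4 * 5)
```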

How do we use this in real life? Example: Video Processing

Imagine you’re analyzing video frames to detect objects, and you have a dataset of three short video clips. Each clip contains 2 frames, and each frame is a grayscale image represented as a grid of pixel intensities.

Explanation of the tensor shape [3, 2, 4, 5]:

- 3 (clips): The tensor contains data for 3 video clips.

- 2 (frames): Each video clip has 2 frames (images).

- 4 (height): Each frame is a grayscale image with 4 rows of pixels (height).

- 5 (width): Each frame has 5 columns of pixels (width).

Example Tensor:

A tensor of shape [3, 2, 4, 5] could look like this:

JavaScript:

    // Import TensorFlow.js
    const tf = require('@tensorflow/tfjs');

    // Create a tensor with shape [3, 2, 4, 5]:
    // 3 clips, 2 frames per clip, 4 rows and 5 columns per frame
    const tensor = tf.tensor([
      [ // Clip 0
        [ // Frame 0
          [0, 1, 2, 3, 4],
          [5, 6, 7, 8, 9],
          [10, 11, 12, 13, 14],
          [15, 16, 17, 18, 19]
        ],
        [ // Frame 1
          [20, 21, 22, 23, 24],
          [25, 26, 27, 28, 29],
          [30, 31, 32, 33, 34],
          [35, 36, 37, 38, 39]
        ]
      ],
      [ // Clip 1
        [ // Frame 0
          [40, 41, 42, 43, 44],
          [45, 46, 47, 48, 49],
          [50, 51, 52, 53, 54],
          [55, 56, 57, 58, 59]
        ],
        [ // Frame 1
          [60, 61, 62, 63, 64],
          [65, 66, 67, 68, 69],
          [70, 71, 72, 73, 74],
          [75, 76, 77, 78, 79]
        ]
      ],
      [ // Clip 2
        [ // Frame 0
          [80, 81, 82, 83, 84],
          [85, 86, 87, 88, 89],
          [90, 91, 92, 93, 94],
          [95, 96, 97, 98, 99]
        ],
        [ // Frame 1
          [100, 101, 102, 103, 104],
          [105, 106, 107, 108, 109],
          [110, 111, 112, 113, 114],
          [115, 116, 117, 118, 119]
        ]
      ]
    ]);

    console.log("Tensor Shape:", tensor.shape); // Output: [3, 2, 4, 5]
    console.log("Tensor Data:");
    tensor.print(); // Pretty-print the tensor

    // Example: Access the first clip, first frame.
    // slice(begin, size) keeps all four axes, so the result has shape [1, 1, 4, 5].
    const firstFrame = tensor.slice([0, 0, 0, 0], [1, 1, 4, 5]);
    firstFrame.print();
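Because the example fills the tensor with the values 0 to 119 in order, the value at each position equals that position's offset when the data is listed row by row (row-major order, with the last axis changing fastest). The sketch below works this out in plain JavaScript for the shape [3, 2, 4, 5]. `flatIndex` is a hypothetical helper written for this post, not a TensorFlow.js function.

```javascript
// Hypothetical helper: convert [clip, frame, row, col] into a row-major offset.
function flatIndex(indices, shape) {
  let offset = 0;
  for (let axis = 0; axis < shape.length; axis += 1) {
    // Make room for this axis, then add the position along it.
    offset = offset * shape[axis] + indices[axis];
  }
  return offset;
}

const shape = [3, 2, 4, 5];

console.log(flatIndex([0, 0, 0, 0], shape)); // 0   (first pixel of clip 0, frame 0)
console.log(flatIndex([0, 1, 0, 0], shape)); // 20  (first pixel of clip 0, frame 1)
console.log(flatIndex([1, 0, 0, 0], shape)); // 40  (first pixel of clip 1, frame 0)
console.log(flatIndex([2, 1, 3, 4], shape)); // 119 (last pixel of the last frame)
```

These offsets match the numbers in the example tensor: the second frame of the first clip starts at 20, and the second clip starts at 40.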

C++:

    #include <tensorflow/core/framework/tensor.h>

    #include <iostream>

    int main() {
      using namespace tensorflow;

      // Allocate a float tensor of shape [3, 2, 4, 5]
      Tensor tensor(DT_FLOAT, TensorShape({3, 2, 4, 5}));

      // Get a typed 4-D view so elements can be read and written by index
      auto tensor_map = tensor.tensor<float, 4>();

      // Fill the tensor with sequential values (0 to 119)
      int value = 0;
      for (int i = 0; i < 3; ++i) {          // Clips
        for (int j = 0; j < 2; ++j) {        // Frames
          for (int k = 0; k < 4; ++k) {      // Height
            for (int l = 0; l < 5; ++l) {    // Width
              tensor_map(i, j, k, l) = static_cast<float>(value++);
            }
          }
        }
      }

      // Print the shape as reported by the tensor itself
      std::cout << "Tensor Shape: " << tensor.shape().DebugString() << std::endl;

      // Example: Access and print data from the first clip, first frame
      std::cout << "First Clip, First Frame:" << std::endl;
      for (int k = 0; k < 4; ++k) {
        for (int l = 0; l < 5; ++l) {
          std::cout << tensor_map(0, 0, k, l) << " ";
        }
        std::cout << std::endl;
      }

      return 0;
    }

These examples come from ChatGPT, so thank you!

Real-life Use Case:

- Video Analysis Pipeline:

  - Input Data: The tensor stores the pixel values of the grayscale video clips. Each clip consists of frames with spatial dimensions 4×5.

  - Processing: You might feed this tensor into a neural network to perform tasks like object detection, motion tracking, or activity recognition.

- Machine Learning Model:

  A convolutional neural network (CNN) could take this tensor as input, process the frames, and learn patterns across both the spatial (height and width) and temporal (across frames) dimensions to identify objects or actions within the clips.
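To give a feel for what "learning spatial patterns" means, here is a minimal plain JavaScript sketch of the sliding-window operation at the heart of a convolutional layer, applied to a single 4×5 frame. It is not TensorFlow.js code and it covers only the spatial part, not the temporal one. The helper `convolve2d`, the sample frame, and the kernel values are all assumptions made up for illustration; in a real CNN the kernel values are learned during training.

```javascript
// Plain JavaScript sketch of the sliding-window step inside a convolutional layer.
// (As in most deep learning libraries, the kernel is applied without flipping.)

// Slide a kernel over a 2D frame: no padding, stride 1.
function convolve2d(frame, kernel) {
  const outRows = frame.length - kernel.length + 1;
  const outCols = frame[0].length - kernel[0].length + 1;
  const output = [];
  for (let r = 0; r < outRows; r += 1) {
    const row = [];
    for (let c = 0; c < outCols; c += 1) {
      // Multiply the kernel with the patch under it and add everything up.
      let sum = 0;
      for (let i = 0; i < kernel.length; i += 1) {
        for (let j = 0; j < kernel[0].length; j += 1) {
          sum += frame[r + i][c + j] * kernel[i][j];
        }
      }
      row.push(sum);
    }
    output.push(row);
  }
  return output;
}

// One 4x5 grayscale frame with a bright vertical stripe in the middle column.
const frame = [
  [0, 0, 9, 0, 0],
  [0, 0, 9, 0, 0],
  [0, 0, 9, 0, 0],
  [0, 0, 9, 0, 0],
];

// Hand-picked 3x3 kernel that responds strongly to vertical lines.
const kernel = [
  [-1, 2, -1],
  [-1, 2, -1],
  [-1, 2, -1],
];

const featureMap = convolve2d(frame, kernel);
console.log(featureMap); // [[-27, 54, -27], [-27, 54, -27]]
```

The output is a 2×3 grid, and its largest values sit where the kernel is centered on the stripe. That is the sense in which a convolution "detects" a pattern in a frame.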

And that’s that for now. Next post: MediaPipe Hands, the model I used for my little program.